- Primary Target Group

- Alternative Target Group

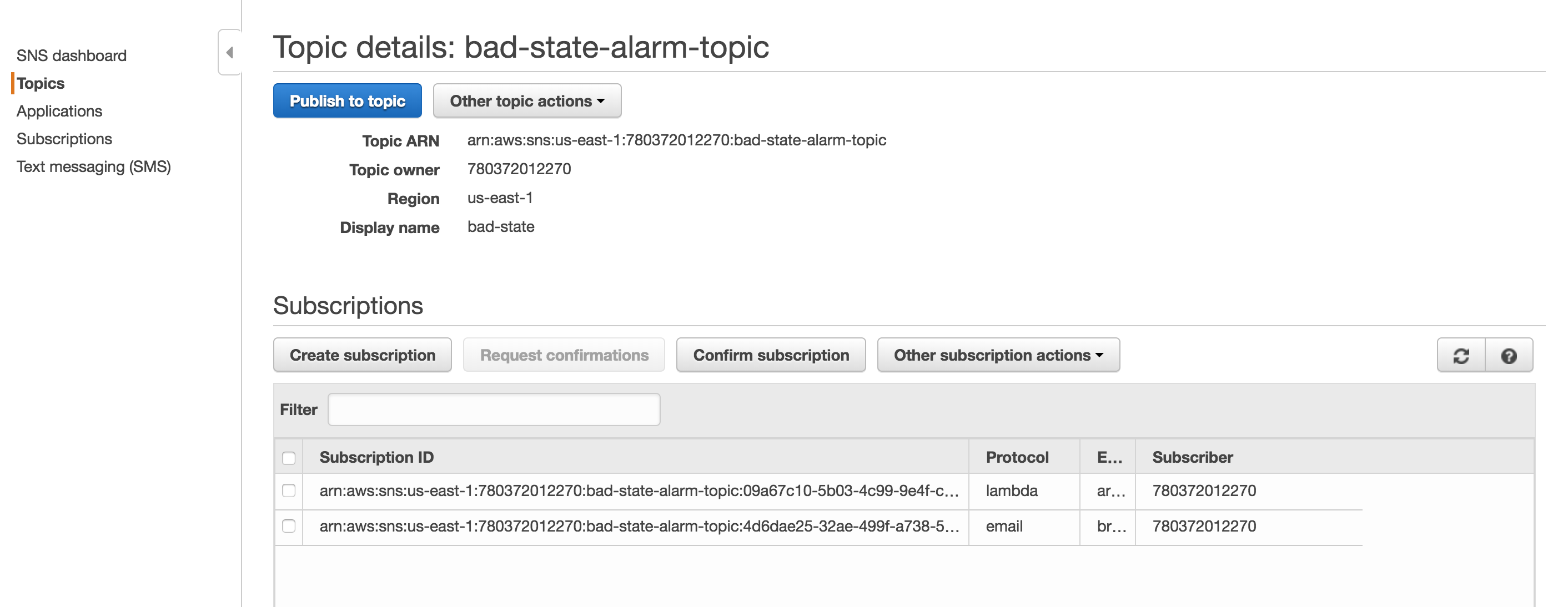

For the magic of automatic failover to happen, we need two pieces. The first is a Lambda function that swaps out the target groups. The second is something that triggers that function.

The trigger is a CloudWatch alarm that sends a notification to SNS. When the SNS notification fires, the Lambda function runs.

First, you will need an SNS topic. My screenshot already shows the Lambda function subscribed to the topic. You don't need to do that by hand: the subscription is created automatically when you set up the Lambda function.

Screenshot of SNS Notification.
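If you would rather script this step than click through the console, here is a minimal sketch. It does roughly what the Lambda console does when you add an SNS trigger:

1. It creates the topic.
2. It grants SNS permission to invoke the function.
3. It subscribes the function to the topic.

It assumes boto3 is installed and AWS credentials are configured. The topic name, statement ID and function ARN are hypothetical placeholders.

```python
# Hypothetical values: the statement ID and any topic/function names you pass in are placeholders.


def subscription_request(topic_arn: str, function_arn: str) -> dict:
    """Build the keyword arguments for sns.subscribe() that point the topic at Lambda."""
    return {
        "TopicArn": topic_arn,
        "Protocol": "lambda",  # SNS delivers each message by invoking the function
        "Endpoint": function_arn,
    }


def invoke_permission_request(topic_arn: str, function_arn: str) -> dict:
    """Build kwargs for lambda.add_permission() so SNS may invoke the function."""
    return {
        "FunctionName": function_arn,
        "StatementId": "allow-sns-alb-failover",  # hypothetical; must be unique per function
        "Action": "lambda:InvokeFunction",
        "Principal": "sns.amazonaws.com",
        "SourceArn": topic_arn,  # only this topic is allowed to invoke the function
    }


def wire_topic_to_lambda(topic_name: str, function_arn: str) -> str:
    """Create (or look up) the topic, allow it to invoke Lambda, and subscribe the function."""
    import boto3  # imported here so the request builders above work without boto3

    sns = boto3.client("sns")
    lambda_client = boto3.client("lambda")
    # create_topic returns the existing topic's ARN if you already own a topic with this name
    topic_arn = sns.create_topic(Name=topic_name)["TopicArn"]
    lambda_client.add_permission(**invoke_permission_request(topic_arn, function_arn))
    sns.subscribe(**subscription_request(topic_arn, function_arn))
    return topic_arn
```

Running `add_permission` twice with the same `StatementId` raises a conflict error. A re-run of this sketch would need a different ID, or the existing permission removed first.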

Second, create a CloudWatch alarm like the one below, and make sure to select the topic you configured previously. The alarm triggers when the primary target group has fewer than 1 healthy host, that is, no healthy hosts at all.

Screenshot of the CloudWatch Alarm

Third, we finally get to configure the Lambda function. Your Lambda function must have sufficient permissions to make updates to the ALB. Below is the JSON for an IAM policy, attached to the function's execution role, that allows the Lambda function to make updates to any ELB.

```json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "VisualEditor0",
            "Effect": "Allow",
            "Action": "elasticloadbalancing:*",
            "Resource": "*"
        }
    ]
}
```
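The policy above covers permissions only. An execution role also needs a trust policy that lets the Lambda service assume it. Below is a minimal boto3 sketch that creates such a role. It makes three assumptions:

- **Narrower permissions.** It scopes the ELB permission down to `elasticloadbalancing:ModifyListener`, the only ELB call the function makes. The wildcard policy above works too.
- **Logging.** It attaches the AWS managed `AWSLambdaBasicExecutionRole` policy so the function's `print` output reaches CloudWatch Logs.
- **Names.** The role and policy names are hypothetical.

```python
import json

# Hypothetical role and inline-policy names; rename to suit your account.
ROLE_NAME = "alb-failover-lambda"
POLICY_NAME = "alb-failover-modify-listener"

# Trust policy: allows the Lambda service to assume this role.
TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

# Permissions policy narrowed to the single ELB API call the function makes.
PERMISSIONS_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": "elasticloadbalancing:ModifyListener",
            "Resource": "*",
        }
    ],
}

# AWS managed policy that lets the function write its logs to CloudWatch Logs.
BASIC_EXECUTION_ARN = "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole"


def create_failover_role() -> str:
    """Create the execution role and return its ARN (requires AWS credentials)."""
    import boto3  # imported here so the policy documents can be inspected without boto3

    iam = boto3.client("iam")
    role = iam.create_role(
        RoleName=ROLE_NAME,
        AssumeRolePolicyDocument=json.dumps(TRUST_POLICY),
    )
    iam.put_role_policy(
        RoleName=ROLE_NAME,
        PolicyName=POLICY_NAME,
        PolicyDocument=json.dumps(PERMISSIONS_POLICY),
    )
    iam.attach_role_policy(RoleName=ROLE_NAME, PolicyArn=BASIC_EXECUTION_ARN)
    return role["Role"]["Arn"]
```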

Here is the Lambda function itself (Python). It points both ALB listeners at the alternative target group:

```python
import boto3

print('Loading function')

client = boto3.client('elbv2')

# ALB listeners whose default action should be switched (replace with your own ARNs)
LISTENER_ARNS = [
    # This is the HTTP (port 80) listener
    'arn:aws:elasticloadbalancing:region:id:listener/app/alb/id/id',
    # This is the HTTPS (port 443) listener
    'arn:aws:elasticloadbalancing:region:id:listener/app/alb/id/id',
]

# The alternative (backup) target group to fail over to
ALTERNATE_TARGET_GROUP_ARN = 'arn:aws:elasticloadbalancing:region:id:targetgroup/id/id'


def lambda_handler(event, context):
    try:
        for listener_arn in LISTENER_ARNS:
            # Forward all traffic on this listener to the alternative target group
            response = client.modify_listener(
                ListenerArn=listener_arn,
                DefaultActions=[
                    {
                        'Type': 'forward',
                        'TargetGroupArn': ALTERNATE_TARGET_GROUP_ARN,
                    },
                ],
            )
            print(response)
    except Exception as error:
        print(error)
        # Re-raise so the invocation is reported as failed (and retried when SNS invokes it)
        raise
```
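The function above never looks at its `event` argument, so it swaps the listeners on every invocation. That includes a test run from the Lambda console, or an `OK` notification if the topic also receives those.

A minimal guard is sketched below. It assumes the standard SNS event shape, in which the CloudWatch alarm notification arrives as a JSON string in `Records[0].Sns.Message`. The helper names `alarm_state` and `should_fail_over` are hypothetical.

```python
import json


def alarm_state(event: dict) -> str:
    """Return the alarm's new state ("ALARM", "OK" or "INSUFFICIENT_DATA") from an SNS event."""
    # SNS wraps the CloudWatch notification as a JSON string in Records[0].Sns.Message
    message = json.loads(event["Records"][0]["Sns"]["Message"])
    return message.get("NewStateValue", "")


def should_fail_over(event: dict) -> bool:
    """Return True only when the alarm has transitioned into the ALARM state."""
    try:
        return alarm_state(event) == "ALARM"
    except (KeyError, IndexError, TypeError, ValueError):
        # Not an SNS/CloudWatch event (for example a manual test): do nothing
        return False
```

Adding `if not should_fail_over(event): return` at the top of `lambda_handler` would limit the swap to genuine alarm transitions.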

Putting it all together: when the primary target group has fewer than 1 healthy member, the alarm fires and SNS invokes the Lambda function. The function then switches the listeners' default action from the primary target group to the alternative (backup) target group. I hope this helps!
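The alarm in the second step is created in the console. If you would rather script it, here is a minimal sketch. It uses the `HealthyHostCount` metric in the `AWS/ApplicationELB` namespace with the `Minimum` statistic over 60-second periods, so a single period with no healthy hosts triggers the alarm.

CloudWatch identifies the target group and load balancer by ARN suffix, not by full ARN. The sketch therefore derives the `TargetGroup` and `LoadBalancer` dimension values from the ARNs.

The alarm name, period and evaluation count are assumptions to tune for your setup.

```python
# Hypothetical alarm settings; the ARNs passed in are placeholders for your own resources.


def arn_resource(arn: str) -> str:
    """Return the resource part of an ARN (everything after the fifth colon)."""
    return arn.split(":", 5)[5]


def alarm_dimensions(target_group_arn: str, load_balancer_arn: str) -> list:
    """Build the TargetGroup/LoadBalancer dimensions CloudWatch uses for ALB metrics."""
    # ...:targetgroup/primary/abc123  -> "targetgroup/primary/abc123"
    # ...:loadbalancer/app/alb/def456 -> "app/alb/def456"
    lb_suffix = arn_resource(load_balancer_arn)
    prefix = "loadbalancer/"
    if lb_suffix.startswith(prefix):
        lb_suffix = lb_suffix[len(prefix):]
    return [
        {"Name": "TargetGroup", "Value": arn_resource(target_group_arn)},
        {"Name": "LoadBalancer", "Value": lb_suffix},
    ]


def alarm_request(name: str, target_group_arn: str, load_balancer_arn: str, topic_arn: str) -> dict:
    """kwargs for cloudwatch.put_metric_alarm(): fire when healthy hosts drop below 1."""
    return {
        "AlarmName": name,
        "Namespace": "AWS/ApplicationELB",
        "MetricName": "HealthyHostCount",
        "Dimensions": alarm_dimensions(target_group_arn, load_balancer_arn),
        "Statistic": "Minimum",
        "Period": 60,  # assumption: one-minute periods
        "EvaluationPeriods": 1,  # assumption: fail over after a single bad period
        "Threshold": 1.0,
        "ComparisonOperator": "LessThanThreshold",
        "AlarmActions": [topic_arn],  # publish to the SNS topic that invokes the Lambda function
    }


def create_alarm(name: str, target_group_arn: str, load_balancer_arn: str, topic_arn: str) -> None:
    """Create or update the alarm (requires AWS credentials)."""
    import boto3  # imported here so the request builders work without boto3

    boto3.client("cloudwatch").put_metric_alarm(
        **alarm_request(name, target_group_arn, load_balancer_arn, topic_arn)
    )
```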

Cheers,

BC